AI Today – We’ve Come a Long Way

Judy Davies, Vice President of Global Marketing Communications, Advantest

Artificial intelligence (AI) has come a long way since what some consider its first implementation: the first programmable digital computer, invented in Germany by Konrad Zuse in 1941. Since then, of course, AI has made amazing technological leaps, while at the same time incurring misconceptions on the part of many regarding its potential uses. Let’s take a look at the current state of AI, and how it’s being enabled by the continued evolution of semiconductor technology.

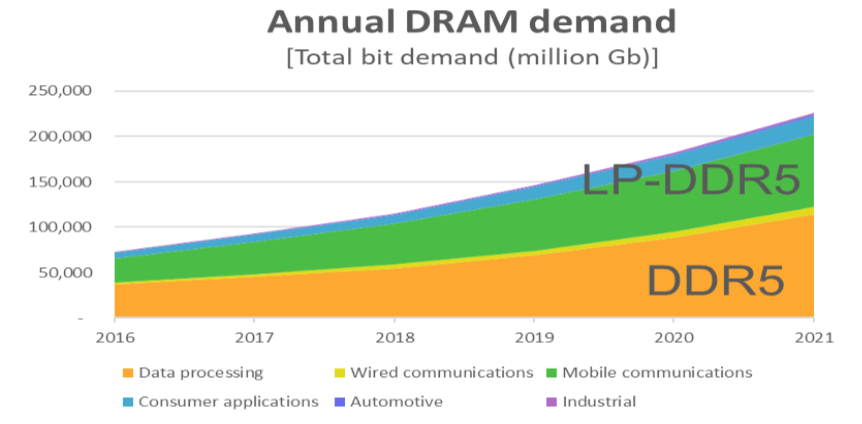

Today’s AI systems comprise advanced software, hardware and algorithms, performing tasks that normally require human intelligence, such as independent learning and problem solving. AI-powered devices can crunch huge amounts of information in a short period of time. The availability of high-speed, low-latency mobile data allows users to access information quickly without a large power requirement, enabling real-time content streaming while making possible a growing range of applications, from augmented and virtual reality (AR/VR), to cloud computing, to “smart everything.”

Cognitive engines are being used by government agencies from municipal police departments to the CIA to sift through and perform intricate analyses of myriad information collected on a daily basis – ranging from fingerprints to images captured on police body-cams. Similarly, California firefighting efforts have benefited from the use of drones to gather on-site information in the midst of raging wildfires, relaying the location of hot spots and overall fire movement. This is particularly valuable when a fire is burning in an area of rough terrain, helping agencies map out the best plan of attack.

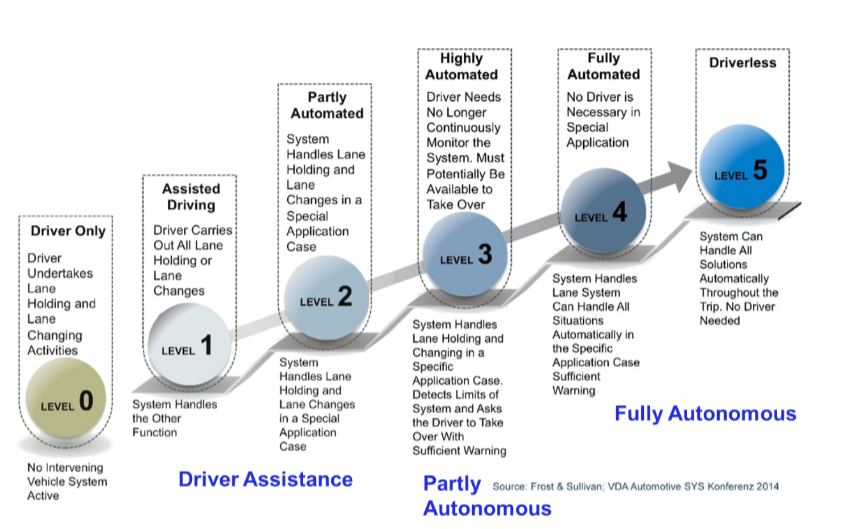

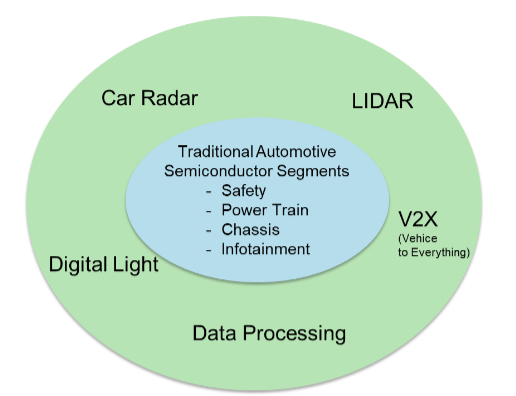

But AI is also being used on a more personal level, in human/machine interfaces. These range from ATMs and smartphone GPS, to home-automation devices such as Amazon Echo or Google Home, to our increasingly interactive vehicles. According to market research firm IC Insights, automotive electronics will be the fastest growing IC market segment through 2021. Companies ranging from Porsche to Dyson (best known for its high-end vacuum cleaners and personal electronics) are working to apply this processing power to all-electric and, soon, fully autonomous, self-driving vehicles.

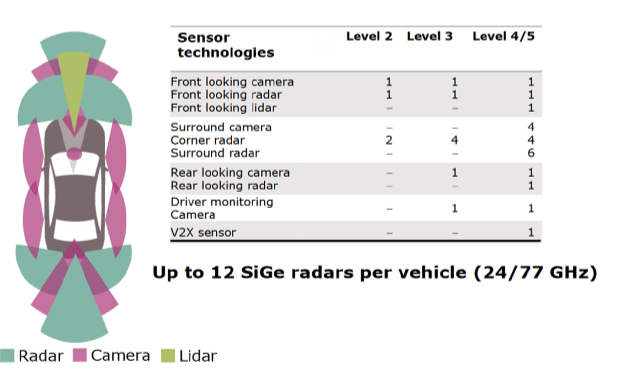

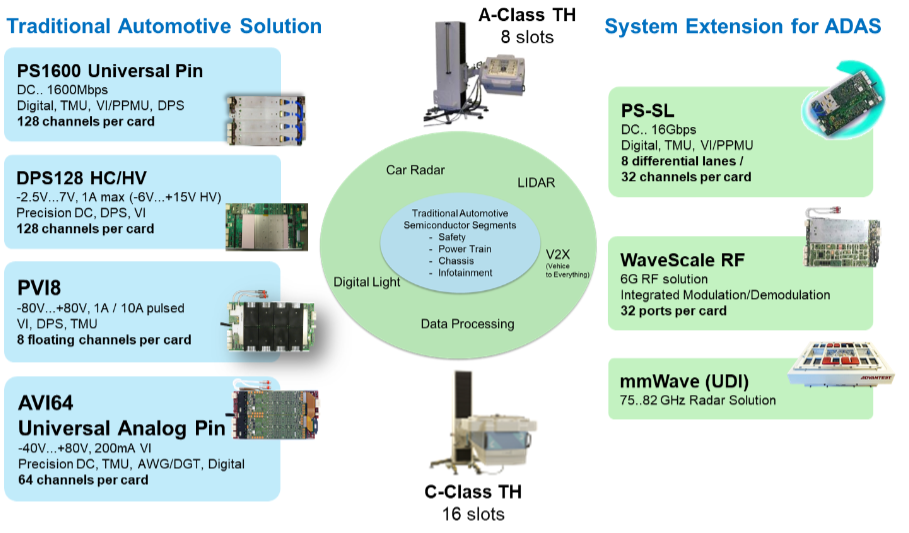

At the heart of a host of these human/machine applications is the ongoing march of semiconductor technology progress, enabling new functionality for new markets. Sensor technology is critical to the development of self-driving cars. A major challenge is equipping vehicles to determine when a turn can safely be made if pedestrians are present. Driverless cars can be made to recognize road signs and the proximity of other vehicles, but people entering crosswalks create a unique challenge – the car may sit there indefinitely, waiting until no movement at all can be detected. By viewing autonomous cars as essentially mobile sensors and part of the connected “Internet of Everything,” the chip industry can speed its efforts to develop solutions that overcome these hurdles while also enabling new business models.

Illustrating its diversity, AI also has applications in medical markets – for example, creating opportunities for those missing limbs to experience improved mobility. Enabled by smaller, more efficient microelectronics and longer battery life, AI can be combined with advances in medical knowledge and kinesiology to achieve next-generation developments in prosthetics.

Companies such as HDT Global, which partners with DARPA, and Touch Bionics, maker of the i-limb prosthetic hand, are making the most of improvements in microprocessors, software and battery technology to usher in a new era in bionics. Using semiconductor technology, researchers at Brown University implanted a sensor in the brain of a 58-year-old quadriplegic woman. Electrical signals from neurons in her motor cortex were able to command a computer-controlled prosthetic arm to grasp a bottle with the woman’s right hand and bring it to her mouth. A number of further advances in brain-controlled prosthetics are on the horizon, based on presentations given last fall at Neuroscience 2017, the annual meeting organized by the Society for Neuroscience.

Another use of AI revolves around intelligent harvesting of ambient energy from a wide range of common external sources, including photons, geothermal heat and kinetic energy, and harnessing it to improve our human experience through mobile and wireless electronics. An example, demonstrated through technology incubator Silicon Catalyst, harvests body heat to power smart watches and other devices. It does this by leveraging the difference between body temperature and the surrounding air; the larger the temperature disparity, the more energy is available. If the power can be channeled in sufficient quantity to drive all the functions on a smart watch, the wearer could theoretically generate electrical power on the move, anywhere he or she goes.
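To put a rough number on "the larger the temperature disparity, the more energy is available," here is a minimal sketch based on the Carnot limit, the textbook ceiling on how much of a heat flow any device can turn into work. It is not a description of how the Silicon Catalyst example operates; the function name `carnot_limit` and the 33 °C skin temperature are illustrative assumptions, and real thermoelectric harvesters recover only a fraction of this ceiling.

```python
def carnot_limit(body_temp_c: float, air_temp_c: float) -> float:
    """Upper bound on the fraction of heat flow convertible to electricity.

    Carnot efficiency is 1 - T_cold / T_hot, with temperatures in kelvin.
    """
    hot_k = body_temp_c + 273.15
    cold_k = air_temp_c + 273.15
    if cold_k >= hot_k:
        # No usable gradient when the air is as warm as (or warmer than) the skin.
        return 0.0
    return 1.0 - cold_k / hot_k


# Assumed skin temperature of 33 C (hypothetical, for illustration only).
SKIN_TEMP_C = 33.0

for air_c in (30.0, 20.0, 0.0):
    limit = carnot_limit(SKIN_TEMP_C, air_c)
    print(f"air at {air_c:4.1f} C -> theoretical ceiling {limit:.1%}")
```

The ceiling rises as the air gets colder, which is why a wearable of this kind would have more energy to work with on a winter walk than in a warm room.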

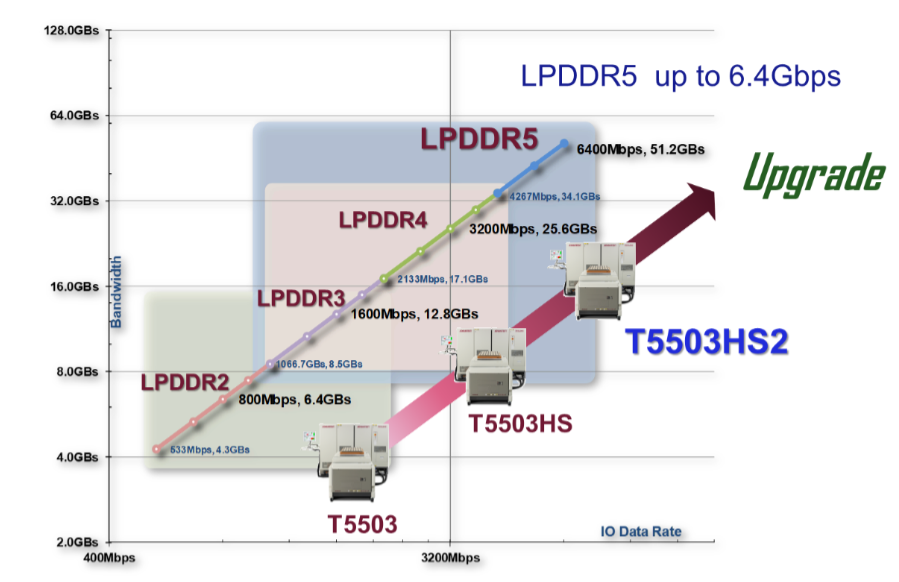

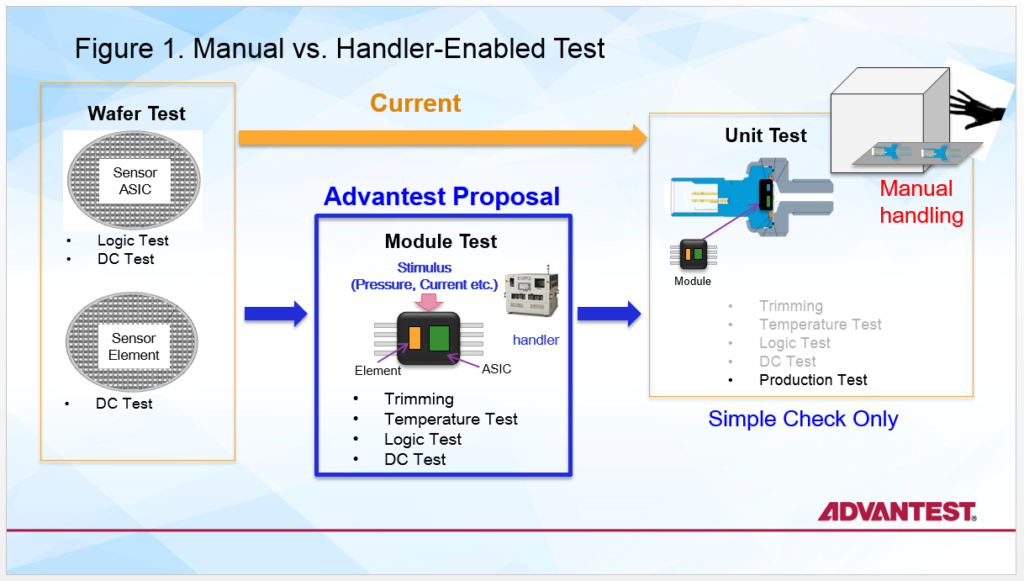

In concert with all of these developments, advances in test solutions and methodologies are helping to reduce the prices of new electronic devices and ensure their availability in sufficient volumes for mass markets. This is critical at a time when people of all kinds are benefiting from their close connections with technology.

Certainly, securing our private lives, our finances and our communication platforms from identity theft has become a key concern. Even so, the growth in human/machine interactions is highly promising. Our abilities to enjoy active lifestyles, drive vehicles and even keep our communities safe all can be enhanced by the use of electronic devices available today. Emerging semiconductor technologies can take us even further.

Judy Davies, VP Global Marketing Communications

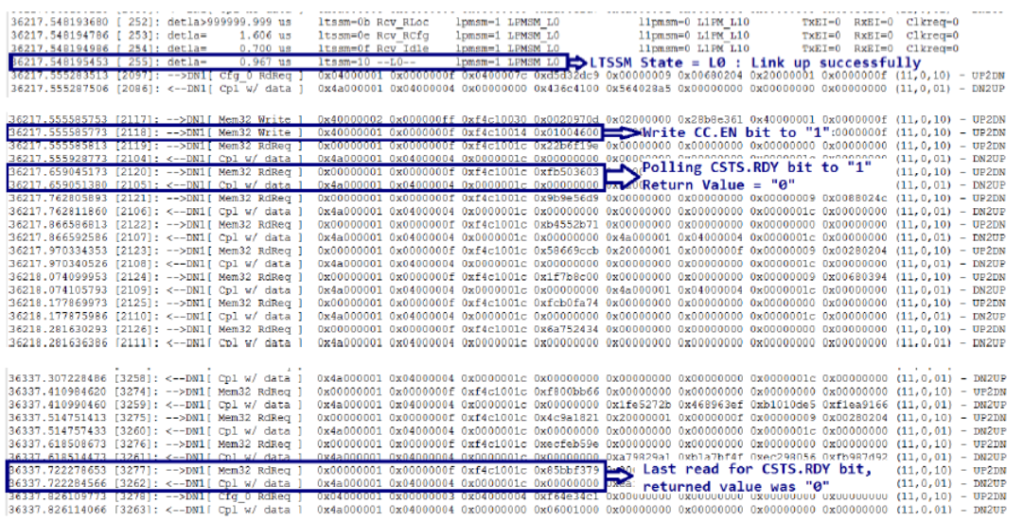

Figure 3. The highlighted lines of code indicate that the SSD never got ready.
